Story ideas can emerge anywhere, and this one came from a panel at the Investigative Reporters & Editors conference, where investigative reporter Garance Burke first heard about unusual problems tied to a popular artificial intelligence-fueled transcription tool called Whisper. Her co-panelist, New York University Professor Hilke Schellmann, privately shared her emerging AI research on how this tool, meant to faithfully note down everything that people said, was instead making things up out of thin air. The fruitful partnership between Schellmann and Burke produced last weekend’s investigation into Whisper, which tech behemoth OpenAI has touted as having near “human level robustness and accuracy.” Instead, more than a dozen computer scientists and developers told AP that Whisper is prone to inventing chunks of text or even entire sentences, including racial commentary, violent rhetoric and even imagined medical treatments. Despite this major flaw, Burke and Schellmann found Whisper is being used in a slew of industries worldwide to translate and transcribe interviews, generate text in popular consumer technologies and create subtitles for videos. Even more concerning: its application in hospitals.

Photographer Seth Wenig and interactive producer Karena Phan’s creative visual work heightened the story’s reach and broke new ground for AP’s AI accountability coverage.

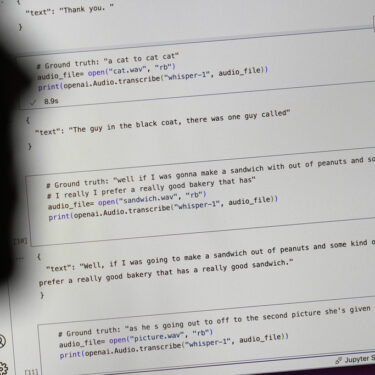

Wenig found creative ways to illustrate the stark differences between what people said and what the AI tool noted down. Phan helped to create a comprehensive social plan that amplified buzz around the story, with @AP X posts getting 3.1 million views and nearly 3,000 retweets. The story also generated more than 100,000 page views.
